Predictive Models of Neurons in Sensory Cortex

The brain represents stimuli in the world via patterns of neural firing. These representations are really interesting because they have to be:

1. Sensitive enough to support rich behavioral abstractions (e.g., emotions evident from human facial expressions)

2. ... but robust enough to work correctly despite the huge amount of variation and noise present in the real world.

One of our key goals is to understand how the brain achieves this delicate balance. Ultimately, we want to build detailed, predictive, end-to-end models of various sensory systems that are generated from compelling first-principles accounts of what each sensory system functionally does. So far, my specific projects in this direction include:

Performance-optimized hierarchical models predict neural responses in higher visual cortex (with Ha Hong, Charles Cadieu, Darren Seibert, and Jim DiCarlo)

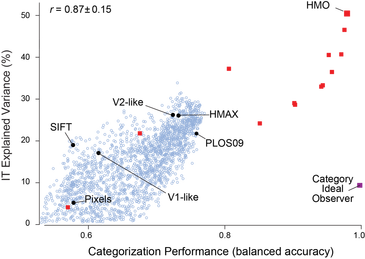

The ventral visual stream underlies key human visual object recognition abilities. However, neural encoding in the higher areas of the ventral stream remains poorly understood. Using high-throughput computational techniques, we discovered that, within a class of biologically plausible hierarchical neural network models, there is a strong correlation between a model’s categorization performance and its ability to predict individual neural unit response data in visual cortex.
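
The kind of analysis described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure used in the original study. It maps a model's features to neural responses with cross-validated ridge regression (an assumed stand-in for whatever linear mapping was actually used), scores each model by the median held-out explained variance across neural units, and then correlates that score with categorization performance across models. The function names, the toy "models", the synthetic "neurons", and the performance numbers are all hypothetical.

```python
import numpy as np


def ridge_weights(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def neural_predictivity(features, responses, alpha=1.0, n_folds=5, seed=0):
    """Median cross-validated R^2 of a linear map from model features
    (images x features) to neural responses (images x units)."""
    order = np.random.default_rng(seed).permutation(features.shape[0])
    predicted = np.empty(responses.shape)
    for test in np.array_split(order, n_folds):
        train = np.setdiff1d(order, test)
        # Center with training-set statistics only, to avoid leakage.
        x_mu, y_mu = features[train].mean(0), responses[train].mean(0)
        W = ridge_weights(features[train] - x_mu, responses[train] - y_mu, alpha)
        predicted[test] = (features[test] - x_mu) @ W + y_mu
    ss_res = ((responses - predicted) ** 2).sum(0)
    ss_tot = ((responses - responses.mean(0)) ** 2).sum(0)
    return float(np.median(1.0 - ss_res / ss_tot))


def performance_predictivity_correlation(performances, predictivities):
    """Pearson correlation, across models, between task performance and
    neural predictivity."""
    return float(np.corrcoef(performances, predictivities)[0, 1])


# Toy demo on synthetic data (hypothetical; not real recordings).
rng = np.random.default_rng(1)
latent = rng.standard_normal((200, 10))  # hidden stimulus features
neurons = latent @ rng.standard_normal((10, 30)) + 0.5 * rng.standard_normal((200, 30))

noise_levels = [0.1, 0.5, 1.0, 2.0, 4.0]
predictivities = []
for noise in noise_levels:
    # Each toy "model" sees the latent features through a random linear
    # projection plus noise; noisier models are worse by construction.
    feats = latent @ rng.standard_normal((10, 40)) + noise * rng.standard_normal((200, 40))
    predictivities.append(neural_predictivity(feats, neurons))

# Hypothetical scores standing in for each model's categorization accuracy.
performances = [0.9, 0.8, 0.7, 0.6, 0.5]
r = performance_predictivity_correlation(performances, predictivities)
print(f"predictivities: {np.round(predictivities, 3)}, r = {r:.2f}")
```

In a real analysis, the performance scores would come from held-out categorization tests and the responses from recorded units; the toy data only exercise the code path.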

Critically, even though we did not constrain our models to match neural data, the top layers of high-performing models turn out to be highly predictive of IT spiking responses to complex naturalistic images, while intermediate layers are highly predictive of responses in V4 cortex, a midlevel visual area that provides the dominant cortical input to IT. More recently, we've used functional imaging data to compare models to brain regions across the ventral stream, finding that lower model layers predict voxel-level responses in early ventral brain regions, such as V1.
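
A layer-to-area comparison like this can be sketched by scoring every model layer against every recorded area and asking which layer predicts each area best. The sketch below is hypothetical throughout. It uses a toy three-stage network built from squaring nonlinearities and synthetic "V1", "V4", and "IT" responses, each generated as a noisy linear readout of one stage. It scores layers with cross-validated ridge regression, an assumed stand-in for the mapping used in the actual work. The point is only the shape of the analysis (a layers-by-areas score matrix and an argmax over layers), not any result.

```python
import numpy as np


def ridge_weights(X, Y, alpha):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    return np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ Y)


def cv_median_r2(features, responses, alpha=1.0, n_folds=5, seed=0):
    """Median cross-validated R^2 across units for a linear readout."""
    order = np.random.default_rng(seed).permutation(features.shape[0])
    predicted = np.empty(responses.shape)
    for test in np.array_split(order, n_folds):
        train = np.setdiff1d(order, test)
        x_mu, y_mu = features[train].mean(0), responses[train].mean(0)
        W = ridge_weights(features[train] - x_mu, responses[train] - y_mu, alpha)
        predicted[test] = (features[test] - x_mu) @ W + y_mu
    ss_res = ((responses - predicted) ** 2).sum(0)
    ss_tot = ((responses - responses.mean(0)) ** 2).sum(0)
    return float(np.median(1.0 - ss_res / ss_tot))


def best_layer_per_area(layers, areas):
    """Score every (layer, area) pair; return the score matrix and, for each
    area, the index of the layer whose features predict it best."""
    scores = np.array([[cv_median_r2(X, Y) for Y in areas.values()] for X in layers])
    return scores, dict(zip(areas, scores.argmax(axis=0).tolist()))


def zscore(A):
    return (A - A.mean(0)) / A.std(0)


# Toy three-stage "hierarchy" on synthetic stimuli (hypothetical, not real data).
rng = np.random.default_rng(0)
layer1 = zscore(rng.standard_normal((300, 8)))
layer2 = zscore((layer1 @ rng.standard_normal((8, 20))) ** 2)
layer3 = zscore((layer2 @ rng.standard_normal((20, 20))) ** 2)
layers = [layer1, layer2, layer3]


def toy_area(layer, n_units=25, noise=0.5):
    # Each toy "area" is a noisy linear readout of one model stage.
    signal = zscore(layer @ rng.standard_normal((layer.shape[1], n_units)))
    return signal + noise * rng.standard_normal(signal.shape)


areas = {"V1": toy_area(layer1), "V4": toy_area(layer2), "IT": toy_area(layer3)}
scores, best = best_layer_per_area(layers, areas)
print(np.round(scores, 2))
print(best)
```

Because the toy's second and third stages are even functions of the first, the lowest layer carries essentially no linear information about the toy "V4" and "IT" units, and the upper layers carry essentially none about "V1". Real networks and recordings are far less clean.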

Optimizing deep neural networks for speech recognition yields predictive models of neural responses in human auditory cortex (with Alex Kell, Sam Norman-Haignere, and Josh McDermott)

Given the ability of neural networks optimized for object recognition to predict neural responses in the ventral visual stream, we wondered whether a similar principle might operate in other sensory domains. In an ongoing project, we are optimizing neural networks for performance on a challenging speech-recognition task and comparing the responses of these models' layers to neuroimaging data from auditory cortex. Here, our goal is to use intermediate model layers to predict responses in a major known auditory area, namely primary auditory cortex, and then to use higher layers to look for novel phoneme, word, and speech areas beyond primary cortex.
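
One way to sketch the planned analysis is to fit a separate cross-validated linear readout from each model layer to each voxel, then label every voxel with the layer that predicts it best. Voxels that prefer higher layers would then be candidates for speech-related regions beyond primary cortex. Everything here is an assumption for illustration: the ridge readout, the two-layer toy network, the function names, and the synthetic "voxels" are hypothetical and do not describe the project's actual model, data, or pipeline.

```python
import numpy as np


def cv_r2_per_target(features, targets, alpha=1.0, n_folds=5, seed=0):
    """Cross-validated R^2 of a ridge readout, returned separately for every
    target column (here, every voxel)."""
    order = np.random.default_rng(seed).permutation(features.shape[0])
    predicted = np.empty(targets.shape)
    for test in np.array_split(order, n_folds):
        train = np.setdiff1d(order, test)
        x_mu, y_mu = features[train].mean(0), targets[train].mean(0)
        Xc = features[train] - x_mu
        # Closed-form ridge solution fit on the training fold only.
        W = np.linalg.solve(Xc.T @ Xc + alpha * np.eye(Xc.shape[1]),
                            Xc.T @ (targets[train] - y_mu))
        predicted[test] = (features[test] - x_mu) @ W + y_mu
    ss_res = ((targets - predicted) ** 2).sum(0)
    ss_tot = ((targets - targets.mean(0)) ** 2).sum(0)
    return 1.0 - ss_res / ss_tot


def preferred_layer_map(layers, voxels):
    """For each voxel, the index of the layer with the highest held-out R^2."""
    scores = np.stack([cv_r2_per_target(X, voxels) for X in layers])  # layers x voxels
    return scores.argmax(axis=0), scores.max(axis=0)


# Toy synthetic data (hypothetical): 200 "sounds", an intermediate layer, and
# a higher layer computed from it by a squaring nonlinearity.
rng = np.random.default_rng(0)
intermediate = rng.standard_normal((200, 12))
higher = (intermediate @ rng.standard_normal((12, 30))) ** 2
higher = (higher - higher.mean(0)) / higher.std(0)
layers = [intermediate, higher]

# Half the toy voxels are driven by each layer, plus measurement noise.
source = np.repeat([0, 1], 40)
voxels = np.column_stack([
    layers[s] @ rng.standard_normal(layers[s].shape[1]) for s in source
])
voxels = voxels / voxels.std(0) + 0.5 * rng.standard_normal(voxels.shape)

preferred, best_r2 = preferred_layer_map(layers, voxels)
print("fraction of voxels assigned to their generating layer:",
      np.mean(preferred == source))
```

With real data, the resulting per-voxel labels would be projected back onto the cortical surface to see whether higher-layer preferences cluster outside primary auditory cortex.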

Given the ability of neural networks optimized for object recognition to predict neural responses in the ventral visual stream, we wondered whether a similar principle might be operative in other sensory domains. In an ongoing project, we are optimizing neural networks for performance on a challenging speech-recognition task and comparing the output of these models to neuroimaging data in auditory cortex. Here are our goal is to use intermediate model layers to predict responses in a major known auditory cortex area -- namely primary auditory -- and then use higher layers to look for novel phoneme, word, and speech areas beyond primary cortex.